Most people assume the hardest part of making a song is the technical production: chords, mixing, mastering. In practice, the hardest part is often simpler: getting from a written idea to an audible direction you can commit to. If you already have lyrics, a poem, a short chorus concept, or even a branded jingle draft, you’re not starting from zero. You’re starting from a narrative. What you need is a way to translate that narrative into melody, dynamics, and structure without losing weeks to learning production tools or searching stock libraries.

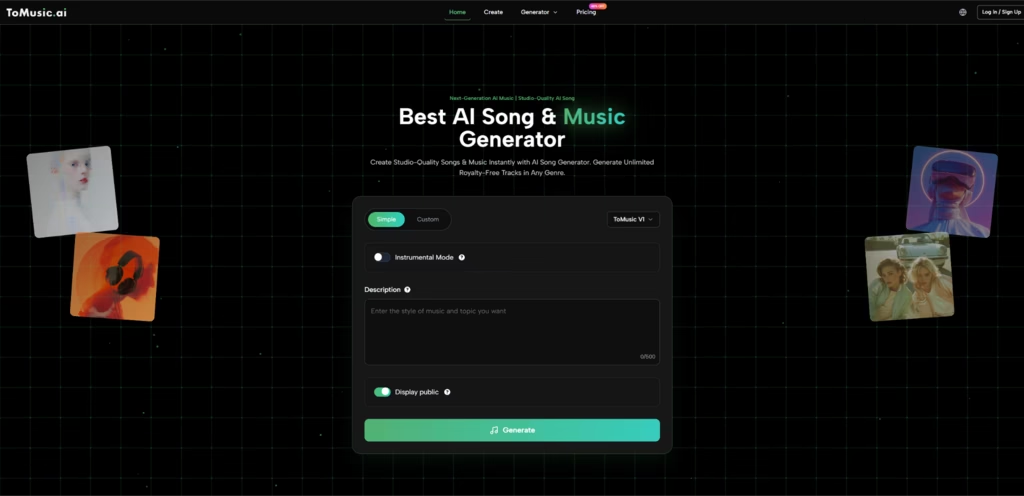

That’s why I’ve come to like a “script-to-soundtrack” mindset. In my own testing, the AI Music Generator workflow felt less like pressing a button and more like running quick auditions: you supply the blueprint (lyrics + structure), generate a few interpretations, then refine until the sound matches the emotional intent behind the words.

The Core Idea: Lyrics Act Like Creative Constraints

A typical text prompt is open-ended. Lyrics are not. Lyrics already contain:

- Cadence (short lines feel punchy; long lines feel narrative)

- Emphasis (what repeats becomes the hook)

- Emotional arc (verses explain; choruses resolve)

- Natural sections (even if you didn’t label them)

In other words, lyrics function like a set of constraints that guides the music toward something coherent. In my tests, that made outputs feel more “purpose-built,” especially for chorus-driven tracks.

A Different Framework: Build a “Song Spec” Before You Generate

Instead of writing a long prompt, I had better results by writing a song specification—a short checklist that forces clarity.

A simple Song Spec

- Use case: reel, podcast intro, product page, full song

- Vocal intent: none / light / prominent

- Energy curve: steady / gradual build / big lift at chorus

- Genre anchor: one main style (avoid too many hybrids)

- Two moods: pick only two (e.g., “nostalgic + hopeful”)

This is less creative writing and more decision-making. Once the spec is clear, your generations tend to become more consistent—and iteration becomes easier.
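One way to keep the spec honest is to write it down as a small data structure that rejects vague input. The sketch below is only an illustration: the class name `SongSpec`, its field names, and the hybrid-genre check are my own assumptions and do not correspond to any generator's API.

```python
from dataclasses import dataclass

# Hypothetical option sets, copied from the checklist above.
VOCAL_INTENTS = {"none", "light", "prominent"}
ENERGY_CURVES = {"steady", "gradual build", "big lift at chorus"}


@dataclass(frozen=True)
class SongSpec:
    use_case: str            # e.g. "reel", "podcast intro", "product page", "full song"
    vocal_intent: str        # one of VOCAL_INTENTS
    energy_curve: str        # one of ENERGY_CURVES
    genre_anchor: str        # one main style
    moods: tuple[str, ...]   # exactly two moods

    def __post_init__(self):
        if self.vocal_intent not in VOCAL_INTENTS:
            raise ValueError(f"vocal_intent must be one of {sorted(VOCAL_INTENTS)}")
        if self.energy_curve not in ENERGY_CURVES:
            raise ValueError(f"energy_curve must be one of {sorted(ENERGY_CURVES)}")
        if len(self.moods) != 2:
            raise ValueError("pick exactly two moods")
        # Rough heuristic (an assumption): separators suggest a stacked hybrid genre.
        if any(sep in self.genre_anchor for sep in ("/", "+", ",")):
            raise ValueError("use one genre anchor, not a hybrid")


spec = SongSpec(
    use_case="reel",
    vocal_intent="light",
    energy_curve="big lift at chorus",
    genre_anchor="alt-pop",
    moods=("nostalgic", "hopeful"),
)
```

If a field is hard to fill in, that is usually the decision you have not made yet.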

The “Two Drafts + One Edit” Method

Here’s the structure I used when I wanted speed without chaos.

Draft A — Make it clean

- Choose a straightforward style direction.

- Keep instrumentation hints minimal.

- Prioritize clarity over complexity.

Draft B — Make it expressive

- Same lyrics, but shift mood or tempo slightly.

- Introduce one distinctive texture (e.g., “dreamy synth pads” or “acoustic guitar”).

One Edit — Adjust a single lever

Pick the better draft and change only one thing:

- tempo (slower/faster)

- mood (warmer/darker)

- vocal style (airier/stronger)

- arrangement density (minimal/full)

In my testing, this method made more progress than generating ten random variations, because every iteration tested one intentional change.
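The "change only one thing" rule can be sketched as a tiny helper that refuses multi-lever edits. Everything here is hypothetical: the lever names come from the list above, and the draft is just a dictionary of settings you track yourself, not something a generator exposes.

```python
# Hypothetical lever names, taken from the list above.
LEVERS = ("tempo", "mood", "vocal_style", "arrangement_density")


def one_edit(draft: dict, **changes) -> dict:
    """Return a copy of the draft settings with exactly one lever changed."""
    if len(changes) != 1:
        raise ValueError("change exactly one lever per iteration")
    (lever, value), = changes.items()
    if lever not in LEVERS:
        raise ValueError(f"unknown lever: {lever}")
    if draft.get(lever) == value:
        raise ValueError("the new value must differ from the current one")
    # Copy instead of mutating, so the chosen draft stays available for comparison.
    return {**draft, lever: value}


draft_b = {
    "tempo": "mid",
    "mood": "warm",
    "vocal_style": "airy",
    "arrangement_density": "minimal",
}
next_take = one_edit(draft_b, tempo="slower")
```

Keeping the previous settings untouched makes it easy to tell which change caused a better or worse take.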

Structure Is the Shortcut to “Real Song Energy”

If you want the output to feel like a song rather than background audio, structure is your best friend.

A reliable structure template

- Intro

- Verse

- Chorus

- Verse

- Chorus

- Bridge

- Chorus

- Outro

Small lyric choices that improved results

- Keep chorus lines shorter and simpler than verse lines.

- Repeat the hook phrase exactly (don’t reword it).

- Avoid overly dense phrasing where you want clear vocal delivery.

This doesn’t require “perfect lyrics.” It requires clean intent.
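The first two lyric choices above are mechanical enough to check before you generate anything. The sketch below assumes sections are labeled with bracketed tags such as `[Verse 1]` and `[Chorus]`; that label format, the function names, and the placeholder lyrics are all my own assumptions for illustration.

```python
import re


def parse_sections(lyrics: str) -> list[tuple[str, list[str]]]:
    """Split lyrics into (section name, lines) pairs using [Label] lines."""
    sections = []
    for raw in lyrics.splitlines():
        line = raw.strip()
        if not line:
            continue
        label = re.fullmatch(r"\[(.+)\]", line)
        if label:
            sections.append((label.group(1).lower(), []))
        elif sections:
            sections[-1][1].append(line)
    return sections


def avg_words(lines: list[str]) -> float:
    return sum(len(l.split()) for l in lines) / len(lines) if lines else 0.0


def check_lyrics(lyrics: str, hook: str) -> list[str]:
    """Return warnings for long chorus lines or a reworded hook."""
    sections = parse_sections(lyrics)
    verse_lines = [l for name, lines in sections if name.startswith("verse") for l in lines]
    chorus_blocks = [lines for name, lines in sections if name.startswith("chorus")]
    chorus_lines = [l for block in chorus_blocks for l in block]
    warnings = []
    if verse_lines and chorus_lines and avg_words(chorus_lines) >= avg_words(verse_lines):
        warnings.append("chorus lines are not shorter than verse lines")
    for i, block in enumerate(chorus_blocks, 1):
        # Exact, case-sensitive match: a reworded hook counts as missing.
        if not any(hook in l for l in block):
            warnings.append(f"chorus {i} does not repeat the hook exactly")
    return warnings


# Placeholder lyrics written for this example only.
LYRICS = """\
[Verse 1]
I kept the letters in a shoebox by the door
Counting every summer that we could not afford

[Chorus]
Hold on to the light
Hold on to the light

[Verse 2]
The radio remembers every word we used to know
Singing through the static on a long road home

[Chorus]
Hold onto the light
"""

warnings = check_lyrics(LYRICS, hook="Hold on to the light")
print(warnings)
```

Here the second chorus rewords the hook ("onto" instead of "on to"), so the check flags it. Word counts are only a rough proxy for how dense a line sounds when sung.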

How to Write Style Guidance Without Overloading It

Even when lyrics lead the way, style guidance matters. The trick is keeping it disciplined.

A stable style line

Genre + two moods + energy/tempo + two textures

Example:

“Alt-pop, nostalgic and bright, mid-tempo, clean drums, warm bass.”

When I added too many textures or stacked multiple genres, outputs often felt indecisive—like they were trying to satisfy conflicting instructions.
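To keep the style line disciplined, you can assemble it from parts and reject anything that exceeds the formula. This is a minimal sketch; the function name `style_line` and the comma-separated output format are my own assumptions, not a format any particular tool requires.

```python
def style_line(genre: str, moods: list[str], energy: str, textures: list[str]) -> str:
    """Build 'genre, mood and mood, energy/tempo, texture, texture.'"""
    if len(moods) != 2:
        raise ValueError("use exactly two moods")
    if len(textures) != 2:
        raise ValueError("use exactly two textures")
    return f"{genre}, {moods[0]} and {moods[1]}, {energy}, {textures[0]}, {textures[1]}."


line = style_line(
    genre="Alt-pop",
    moods=["nostalgic", "bright"],
    energy="mid-tempo",
    textures=["clean drums", "warm bass"],
)
print(line)
```

The hard limits are the point: if a third texture feels essential, swap it for one of the two rather than adding it.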

Where This Fits in a Real Creator Workflow

If you’re deciding between this and traditional approaches, it helps to compare how you work, not just what you can theoretically do.

| Comparison Item | Lyrics-led Generation Workflow | Stock Music Libraries | Traditional Production |
| --- | --- | --- | --- |
| Starting point | Words + structure | Searching by tags | Musical skills + time |
| Time to first usable draft | Fast | Medium | Slow |
| Can it follow your message? | High | Low–Medium | Very High |
| Iteration speed | High | Medium | Low–Medium |
| Best for | Creators with lyrics or scripts | Safe background picks | Maximum control |

If you publish often, speed-to-direction is usually the difference between finishing and stalling.

Limitations That Make It Feel More Real

I don’t think it’s helpful to pretend this is effortless. In my experience:

What can vary

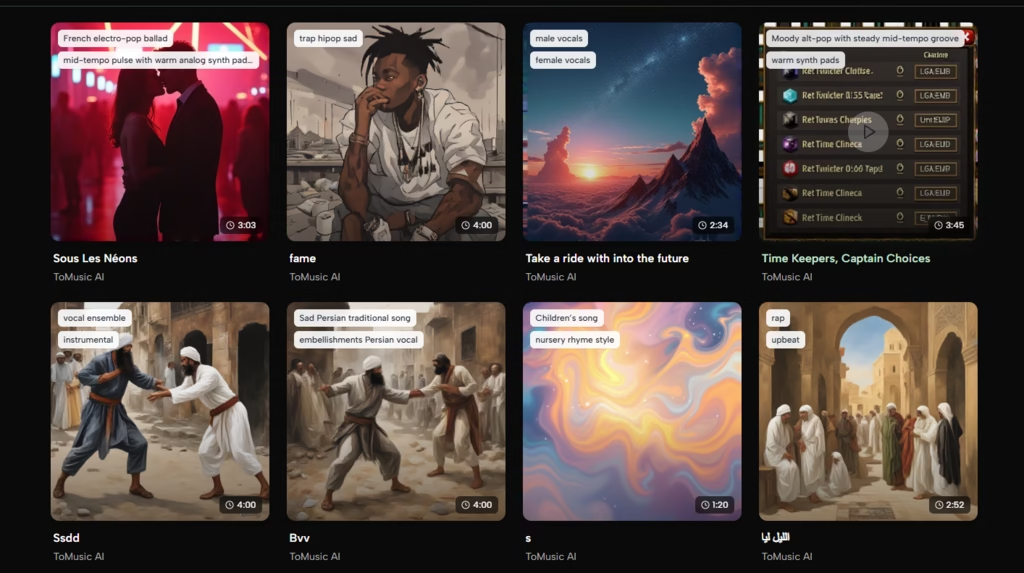

- Some generations nail the vibe; others miss even with similar inputs.

- Vocal clarity can shift depending on line length and word density.

- Results may require multiple attempts to land on the “right” take.

What helped most

- Generate 2–3 drafts first, then choose direction.

- Simplify: one genre anchor, two moods, two textures.

- Edit chorus lines to be shorter if vocals feel unclear.

- Iterate one variable at a time.

This turns the process from “lottery” into “creative feedback loop.”

A More Trustworthy Way to Think About Output Quality

The best framing I found is: you’re not buying a perfect song instantly—you’re buying a faster loop for testing ideas. You still make the calls:

- does the chorus lift at the right moment?

- does the mood match your visuals or brand tone?

- does the track compete with narration?

When you treat the output as a draft you can steer, the workflow becomes genuinely useful.

A 10–15 Minute Starter Routine

- Paste lyrics with a clear Verse/Chorus structure.

- Add one style line: genre + two moods + tempo/energy + two textures.

- Generate two drafts: one clean, one expressive.

- Pick the better draft and change one lever only.

- Test it under your actual video or voiceover edit before regenerating.

Used this way, a lyrics-to-music AI tool becomes a practical bridge between written intent and finished sound, especially when your goal is to ship creative work consistently rather than chase a mythical perfect first take.